Let me begin by disclaiming any expertise on medical marijuana. I will also point out that the term "junk science" has been badly overused, and has been used to cast aspersions on evidence that really isn't junk science at all, including evidence for climate change and evolution. So, for anyone who thinks I am applying this label to the study I am about to describe because I'm a right-wing social conservative, that's not so. It's just that I know real junk science when I see it.

I became aware of this study when a friend posted on Facebook a link to an article from a blog called "Smell the Truth," whose subtitle says it covers "medical marijuana news." So, when I read the article from that blog, which put a very positive spin on the findings of the study it described, I thought I should read the original paper to see for myself. And then I saw the title of the journal in which the study was published, Clinical Gastroenterology and Hepatology. Hmmm. Not a journal I routinely read, but the last time I read a paper published there, the study was so badly designed as to be laughable. But let me keep an open mind. Surely the authors of the paper, and the editors of the journal, deserve that. And no doubt the lead author's mother thought it was an excellent paper.

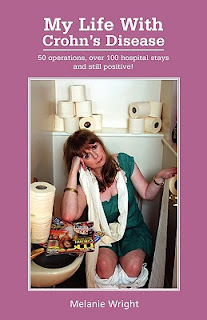

The study investigators wanted to know whether smoking marijuana might help patients with Crohn's disease. Crohn's is a chronic, often progressive inflammatory disease of the bowel that causes much misery. It is challenging to control with medical therapy and not infrequently requires surgery to treat complications.

The study, conducted in Israel, took a group of patients with Crohn's Disease and randomized them to marijuana or placebo. The patients were then followed over the next ten weeks, and their symptoms were quantified by the Crohn's Disease Activity Index (CDAI), a standardized research tool used to assess the effect of approaches to treatment.

The authors defined a CDAI score <150 as "complete remission." Without getting into the details of the scoring system, I should just point out that the term "complete remission" is misleading, as much of the score depends on symptoms that can wax and wane over a short period of time, while other elements of the score (4 of the 8 elements) relate to complications that occur over a longer time.

So it is possible to achieve substantial reductions in the CDAI through short-term symptomatic relief without any real change in underlying disease activity. To call such an effect "complete remission," then, may give us the wrong impression of what is happening.

It would be more accurate to describe the effect as what it is, namely short-term symptomatic relief.

Of course the "Smell the Truth" blog trumpeted the phrase "complete remission" and said the treatment "performed like a champ."

But let us move on to the design of the study, so I can skewer that before I tell you about the results.

The number of patients was a mere 21. This is very small - in fact, inexplicably so. That matters because the smaller the study, the less likely it is to find significant differences between the treatment and control groups, and the more easily chance alone can mislead you (the "control group" consisting of patients who did not receive the treatment, typically receiving a placebo, or "sham" treatment, instead). I will repeat my favorite example of this. Everyone understands that, when you flip a coin, it has an equal chance of coming up heads or tails. If you flip it twice, however, it could easily come up heads twice or tails twice, and if you didn't understand the effect of sample size, you could easily be misled about the probabilities. If you flip it 1,000 times, you will get pretty close to 500 each heads and tails - and learn the truth.
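
If you would like to see the coin example in numbers, here is a minimal Python sketch. The function name and the 450-to-550 window are my own choices for illustration; the arithmetic is just the standard binomial count for a fair coin.

```python
from math import comb

def prob_heads_between(n_flips, low, high):
    """Exact chance that a fair coin shows between `low` and `high` heads
    (inclusive) in `n_flips` flips."""
    # Count the sequences with an acceptable number of heads, then divide
    # by the total number of equally likely sequences.
    favorable = sum(comb(n_flips, k) for k in range(low, high + 1))
    return favorable / 2 ** n_flips

# Two flips: chance of exactly one head and one tail.
print(prob_heads_between(2, 1, 1))  # 0.5

# 1,000 flips: chance of landing within 50 of an even split.
print(prob_heads_between(1000, 450, 550))
```

With two flips, half the time you see nothing but heads or nothing but tails. With 1,000 flips, a result outside the 450-to-550 window is very rare.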

Anyone who engages in scientific research should understand the effect of sample size on the value of the results and can do something biostatisticians call a "power calculation" to figure out how big the study has to be to yield meaningful results. Assuming these researchers were not clueless, and assuming they had access to someone who knew enough stats to do a power calculation, the only explanation for doing such a small study is that they intended it to be a very preliminary pilot study, a sort of "proof of concept" trial. But that isn't how it was reported in the journal.
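
To give a rough idea of what a power calculation looks like, here is a minimal sketch using a common normal-approximation formula for comparing two response rates. The function name, the 5% significance level, the 80% power target, and the response rates are all my own assumptions for illustration; none of them come from the paper, and a real biostatistician might well choose a different method.

```python
from math import ceil
from statistics import NormalDist

def patients_per_group(p_treated, p_control, alpha=0.05, power=0.80):
    """Approximate number of patients needed in each arm to detect a
    difference between two response rates (two-sided test, normal
    approximation with unpooled variances)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance cutoff
    z_power = NormalDist().inv_cdf(power)          # desired power
    variance = p_treated * (1 - p_treated) + p_control * (1 - p_control)
    effect = p_treated - p_control
    return ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)

# Hypothetical rates, not taken from the paper: 30% vs. 10% response.
print(patients_per_group(0.30, 0.10))

# A smaller hypothetical difference needs far more patients.
print(patients_per_group(0.20, 0.10))
```

The point of the exercise is that the required size depends heavily on how big a difference you expect to find, which is why the calculation should be done before the study starts.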

So the small sample size is major flaw #1. Often a single major flaw is enough to render a study useless in telling us anything worth knowing. But there's more.

When one does a study to test the effects of a treatment, the best design is what's called a randomized, controlled trial (or RCT), and an RCT is best done in a way that is "placebo-controlled" and "blinded." Ideally it should be "double-blind," which means that neither the subjects (patients) nor investigators know which subjects got active treatment and which got placebo. Often, from the subjects' perspective, placebo control and blinding are closely intertwined. It is easy to see the challenge of placebo control and blinding in this study.

Subjects randomly assigned to the active treatment group smoked marijuana cigarettes containing a standardized amount of tetrahydrocannabinol (THC, the important active ingredient). The control subjects smoked "sham" cigarettes made from cannabis flowers from which the THC had been extracted.

Now, remember, in order for the desired effects of blinding and placebo control to operate, the subjects in each group must not be able to tell whether they are receiving active drug or placebo. Imagine you are taking a medicine to treat depression, an illness with mostly subjective symptoms. If you are in a study, and you don't know whether you are taking active drug or placebo, you could easily be taking placebo yet experience improvement because you think maybe it's the active drug: the "placebo effect," which is very real and often surprisingly powerful.

So how could blinding and the placebo effect work in this trial? Let us assume, giving the authors maximum benefit of the doubt, that they recruited only subjects who had never smoked marijuana and who would therefore be unfamiliar, at least from personal experience, with its effects. (The paper didn't say that, and so it probably isn't true, but I'm trying to help them out here.) Even then, subjects probably all had at least some idea what marijuana is supposed to do, and so could very easily tell, once they smoked their cigarettes, whether they were real or sham. So there could not possibly have been any real blinding, and without blinding the placebo control cannot do its job. And that is major flaw #2.

The remaining major flaw, which is also related to sample size, concerns randomization. The value of randomization is that it helps to minimize the chance that the two groups differ - in some way that the investigators may not even have realized could matter - enough to affect the results. For example, let's go back to testing the new medicine for depression. Suppose we look at the two groups and find that the active treatment group did better than the placebo group. But then suppose we go back and ask all the subjects whether, during the study, they happened to acquire a new boyfriend or girlfriend, and it just so happens that far more in the active treatment group than in the control group say yes. We would then have no idea whether they felt better because of the new relationship or the treatment, or whether the treatment helped them get into a new relationship. This is what researchers call a "confounder." The effect of randomization is to minimize the role of confounders, and the larger the sample size, the more effective randomization is in accomplishing this. With only 21 subjects, it cannot be counted on to balance the groups.
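
Here is a small simulation sketch of why randomization works better in larger samples. Everything in it is hypothetical: I assume a hidden trait (say, a new relationship) present in 30% of subjects, split the subjects at random into two equal groups, and measure how far apart the groups end up on that trait, averaged over many repetitions.

```python
import random

def mean_imbalance(n_subjects, prevalence=0.3, trials=500, seed=0):
    """Average absolute gap between two randomized groups in the share of
    subjects who carry a hidden trait."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    half = n_subjects // 2
    total_gap = 0.0
    for _ in range(trials):
        # Each subject either has the hidden trait (True) or not (False).
        has_trait = [rng.random() < prevalence for _ in range(n_subjects)]
        rng.shuffle(has_trait)  # random assignment: first half is treated
        treated, control = has_trait[:half], has_trait[half:]
        gap = sum(treated) / len(treated) - sum(control) / len(control)
        total_gap += abs(gap)
    return total_gap / trials

print(mean_imbalance(20))    # a study of roughly this size
print(mean_imbalance(1000))  # a much larger study
```

In the small study the two groups typically differ noticeably on the hidden trait just by the luck of the draw; in the large one the gap shrinks to a small fraction of that.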

So now that you know the three major flaws, let's look at the results. Of the 11 subjects in the cannabis group, 5 achieved "complete remission," meaning short-term symptomatic improvement, while this was achieved for only 1 of 10 in the control group. Looks like a big difference, right? Well, because the sample size is so small, statistical calculations tell us that, even if the treatment did nothing at all, a difference at least this large would turn up by random chance about 43% of the time! Thus, for the primary outcome measure, the main thing investigators were testing for, they found no statistically significant difference with active treatment. The most positive spin you can put on this, while maintaining scientific objectivity, is that there was a "trend" toward improvement that might turn out to be real in a larger study. To their credit, part of their conclusion says a larger study should be done, and a "non-inhaled" form of the drug should be tested. But that did not deter them from spinning the results as positive: cannabis "produced significant clinical benefits ... without side effects."
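
For readers who want to check this kind of result themselves, here is a minimal sketch of one standard way to test a small two-by-two table. I am assuming a two-sided Fisher exact test, which is a common choice for samples this small; I do not know which test the paper used, so do not expect this to reproduce the figure quoted above. The function name is my own.

```python
from math import comb

def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact p-value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    total_tables = comb(row1 + row2, col1)

    def prob(k):
        # Chance of k successes in row 1 when all margins are held fixed.
        return comb(row1, k) * comb(row2, col1 - k) / total_tables

    smallest, largest = max(0, col1 - row2), min(row1, col1)
    observed = prob(a)
    # Add up every table that is no more likely than the one observed.
    return sum(prob(k) for k in range(smallest, largest + 1)
               if prob(k) <= observed * (1 + 1e-9))

# Cannabis group: 5 in remission, 6 not. Control group: 1 in remission, 9 not.
p_value = fisher_exact_two_sided(5, 6, 1, 9)
print(round(p_value, 3))
```

Whatever the exact test, the thing to look at is whether the p-value falls below the conventional 0.05 cutoff. With these counts, this test does not get there either.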

That last phrase didn't quite get me to laugh out loud, but I did find it quite amusing. "Without side effects?" That is nothing short of hilarious. Any effect of a drug that is not the intended effect is, by definition, a side effect. And so all of the effects of smoking marijuana that were not related to ameliorating the symptoms of Crohn's Disease were side effects. Anyone who knows even a little about marijuana knows that users experience several completely unrelated effects. These may not have been side effects that caused subjects distress or made them decide to drop out of the study. But they were most definitely side effects.

So what does this study actually tell us? We now know that researchers in Israel have studied medical marijuana for Crohn's Disease, and some patients experienced short-term symptomatic improvement. This was only a trend, and the sample size was so small that the difference could have been due purely to random chance and must be tested in a much larger study before we will know anything with any confidence. Finally, this study cannot tell us anything about longer-term effects of marijuana on Crohn's patients, either positive or negative. There is no known medical reason to suspect it has any effect on the pathophysiology of the disease, and so it would not be expected to have any effect on the frequency of exacerbations or development of complications.

This study will be touted by advocates for medical marijuana and by those who support decriminalization or complete legalization. And now you know just what the study tells us - and what it doesn't.
